- Nov 2025: THUNDER has been accepted to 3DV 2026!

- Oct 2025: CLUTCH, our work on 3D hand animation with LLMs, is out.

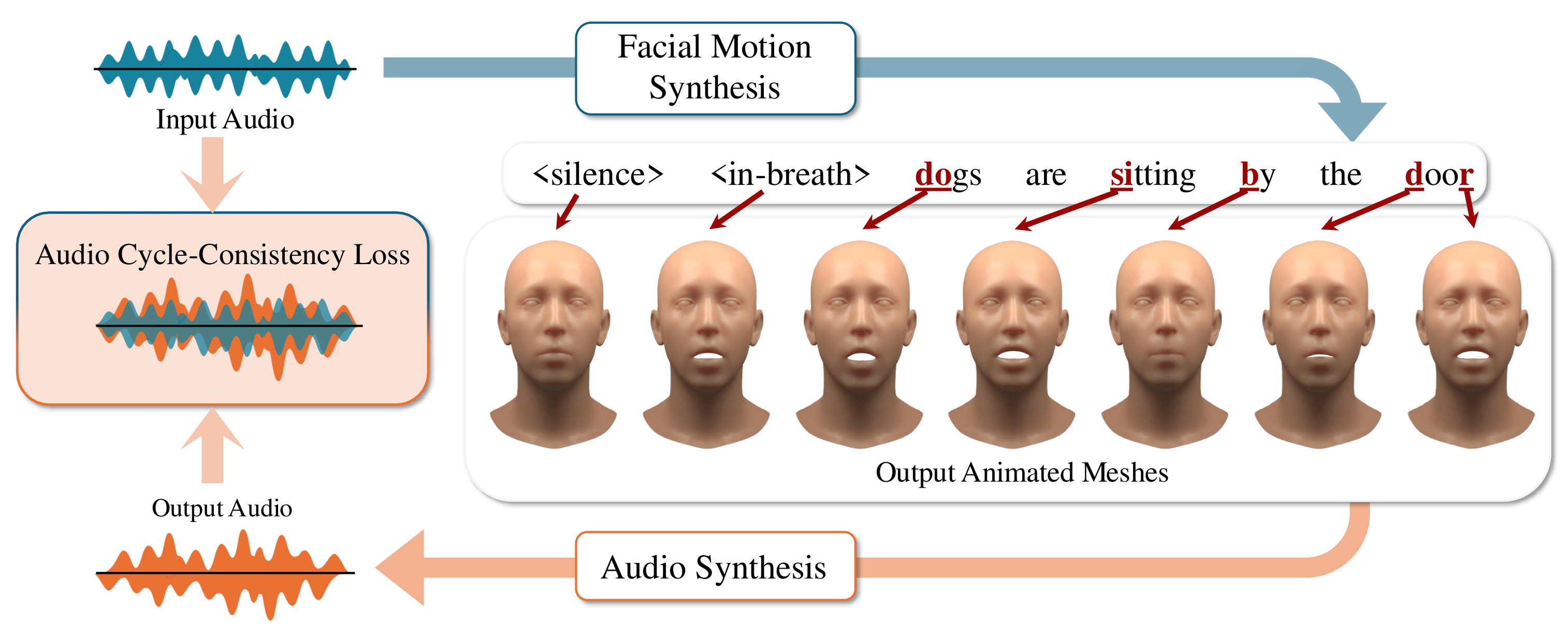

- Apr 2025: THUNDER, our work on self-supervised 3D talking head avatars, is live on arXiv.

- Dec 2024: Started a part-time Research Internship at Amazon Tübingen, continuing our work on 3D avatars.

- Oct 2024: SMIRK and Emotional Speech-driven 3D Body Animation accepted to CVPR 2024!

- Nov 2023: Began full-time Research Internship at Amazon Sunnyvale working on speech-driven 3D avatars.

- Dec 2023: EMOTE, our SIGGRAPH Asia 2023 paper on emotional speech-driven facial animation, is now online!

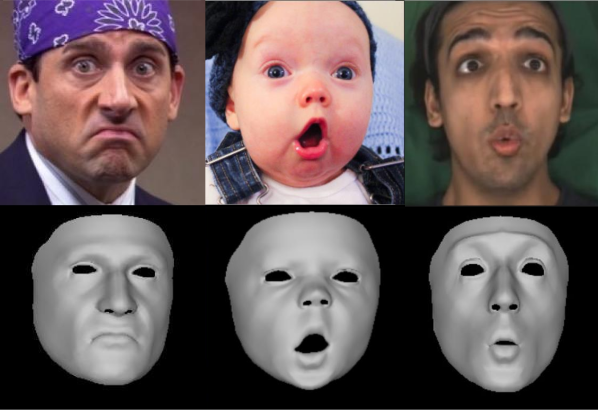

- Jun 2022: EMOCA presented at CVPR 2022. We show how to reconstruct expressive 3D faces in the wild.

- Nov 2020: Started my PhD under Michael Black and Timo Bolkart in the Perceiving Systems department at the Max Planck Institute for Intelligent Systems, Tübingen.

- May 2017: DeepGarment (my Master’s thesis project) presented at Eurographics 2017.

Radek Daněček

Hi, my name is Radek and I’m an expert in making emotional faces.

OK, let me explain: I’m a scientist working at the intersection of computer graphics, computer vision, and machine learning, with a particular obsession with 3D faces. My PhD focuses on reconstructing 3D faces from arbitrary videos or images, and then bringing them to life through animation.

In particular, my research focuses on the perceptual quality of these reconstructions and animations, that is, how real or emotionally convincing they feel to us humans. After all, we want future generations of AI to have a face we can relate to, right? (I mean, we’re not there yet, but I’m working on it.)

I’m currently in the final stages of my PhD at the Perceiving Systems department of the Max Planck Institute for Intelligent Systems in Tübingen, Germany, where I’m fortunate to be supervised by Michael Black and Timo Bolkart.

Along the way, I’ve had the pleasure of spending time at Amazon, Trinity College Dublin, and BarcelonaTech.

Before that, I worked with Disney Research Zurich on 3D face capture, and with Vizrt Switzerland on human tracking for sports broadcasting.

I hold a Master’s degree in Visual Computing from ETH Zurich and a Bachelor’s in Computer Science from the Czech Technical University.

In my free time, I usually look for creative ways to get injured, such as skiing, snowkiting, kiteboarding, mountaineering, multi-day trekking, or Brazilian jiu-jitsu.